To simulate a world is almost a divine act. One Jewish tradition holds that there were multiple worlds created and destroyed before our own. They didn’t quite work out—beta versions perhaps?—and so the universe was restarted again and again, until we got to the current version.¹

But we will never simulate a world perfectly. First we must contend with the wrench of chaos theory (butterflies flapping, the inherent imprecision of measurement, and all that), which has demonstrated that even systems not much more complicated than a swinging pendulum (a double pendulum, for instance) can make computational simulations diverge rapidly over time from only small changes in the initial conditions. Start with a tiny rounding error, or a measurement mistake, and there’s no guarantee that a prediction will be anywhere close to where the system will actually end up.
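To make that divergence concrete, here is a minimal Python sketch. It uses the logistic map x → 4x(1 − x), a standard textbook example of chaos chosen here purely for illustration (it is not a system discussed in this piece). Two runs start 10⁻¹⁰ apart, roughly the size of a rounding error. Because the gap roughly doubles with each step on average, it grows to the size of the values themselves within a few dozen iterations.

```python
# Chaotic divergence in the logistic map x -> r * x * (1 - x) with r = 4.
# Chosen only as a toy illustration of sensitivity to initial conditions.


def logistic_trajectory(x0: float, steps: int, r: float = 4.0) -> list[float]:
    """Iterate the logistic map from x0 and return every state, x0 included."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


# Two runs whose starting points differ by a "rounding error" of 1e-10.
run_a = logistic_trajectory(0.2, 60)
run_b = logistic_trajectory(0.2 + 1e-10, 60)

for step in (0, 10, 20, 30, 40, 50, 60):
    gap = abs(run_a[step] - run_b[step])
    print(f"step {step:2d}: |difference| = {gap:.3e}")
```

The early gaps stay tiny; by the later steps the two "predictions" are effectively unrelated, even though nothing but the tenth decimal place differed at the start.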

But there is also something that might be called the Kitchen Sink Conundrum: as more and more detail—both in modeling features and data—is thrown into a simulation, there is no guarantee that it will get us closer to a good understanding of reality itself (see also kitchen sink regression).

This was clearly articulated decades ago, in a 1979 RAND Corporation report by David Leinweber entitled “Models, Complexity, and Error.” The report examined two types of error: error of measurement and error of specification.

Error of specification refers to how well the model accounts for the richness of the system being modeled. A more sophisticated model, with more operations on the input, will hopefully correspond better to the real world: it will be more accurate. So as the complexity of the model increases, it will adhere better to reality and the error of specification will fall (though there may be diminishing returns).

On the other hand, there is also error of measurement. The more complex a model, the more likely that any measurement error will compound, and cause the outputs to be wildly inaccurate (hints of chaos theory?). As Leinweber notes, “As the models grow larger and more complex, the compounded error in the prediction increases.”
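A back-of-the-envelope sketch of how measurement error compounds, under an assumption made here for illustration rather than taken from Leinweber: the model’s output is simply the product of n measured inputs, each overstated by the same 1%. In that worst case the output is off by (1.01)ⁿ − 1. The function name and the multiplicative setup are hypothetical.

```python
# Hypothetical setup: a model whose output is the product of n measured
# inputs, each measured with the same small relative error.


def compounded_relative_error(n_inputs: int, per_input_error: float = 0.01) -> float:
    """Relative error of a product of n inputs when every input is off by
    the same fraction in the same direction (the worst case)."""
    return (1.0 + per_input_error) ** n_inputs - 1.0


for n in (1, 5, 10, 25, 50):
    print(f"{n:3d} inputs: output off by {compounded_relative_error(n):6.1%}")
```

Under these assumptions, fifty inputs turn a 1% error into roughly 64%. If the per-input errors were independent and random rather than all pointing the same way, they would partly cancel, and to first order the typical error would grow more like √n times the per-input error: slower, but still growing with every input added.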

So one curve goes down with complexity and the other goes up. Therefore, the overall error has a minimum: there is an “optimal model complexity” and “[f]urther complicating the model buys nothing.”
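The shape of this tradeoff can be sketched numerically. The curves below are assumed forms for illustration only, not the ones in Leinweber’s or Alonso’s work: specification error falls as a/c and measurement error rises as b·c with model complexity c. Their sum is smallest at c* = √(a/b), and a simple grid search recovers the same point. Raising b (noisier data) pushes the optimum toward simpler models, a miniature version of Leinweber’s “Weak data require simpler models.”

```python
import math

# Assumed error curves for illustration only (not Leinweber's or Alonso's):
#   specification error a / c falls as complexity c grows,
#   measurement error   b * c rises as complexity c grows.


def total_error(c: float, a: float, b: float) -> float:
    """Sum of the two hypothetical error terms at model complexity c."""
    return a / c + b * c


def analytic_optimum(a: float, b: float) -> float:
    """Complexity where d/dc (a/c + b*c) = -a/c**2 + b equals zero."""
    return math.sqrt(a / b)


def best_complexity(a: float, b: float) -> float:
    """Grid search over complexities 0.5, 0.6, ..., 10.0 for the least total error."""
    levels = [k / 10 for k in range(5, 101)]
    return min(levels, key=lambda c: total_error(c, a, b))


# Cleaner data (small b) versus weaker data (large b).
print(best_complexity(a=4.0, b=1.0), analytic_optimum(4.0, 1.0))  # prints 2.0 2.0
print(best_complexity(a=4.0, b=4.0), analytic_optimum(4.0, 4.0))  # prints 1.0 1.0
```

Past the optimum, each added unit of complexity raises total error: in this toy setup, “further complicating the model buys nothing.”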

In fact, recognition of this tradeoff goes back even further: the chart in this RAND report is based on a 1968 journal article, “Predicting Best with Imperfect Data” by William Alonso.

This tradeoff between complexity and accuracy is a humbling realization. Do not add complexity in the hope of greater verisimilitude: not only can the effort yield diminishing returns, it can even be entirely counterproductive. Computational and mathematical models are powerful, but they must be used carefully.²

As Leinweber notes: “Weak data require simpler models.” Do not succumb to throwing the kitchen sink into a model as one’s default mode. ■

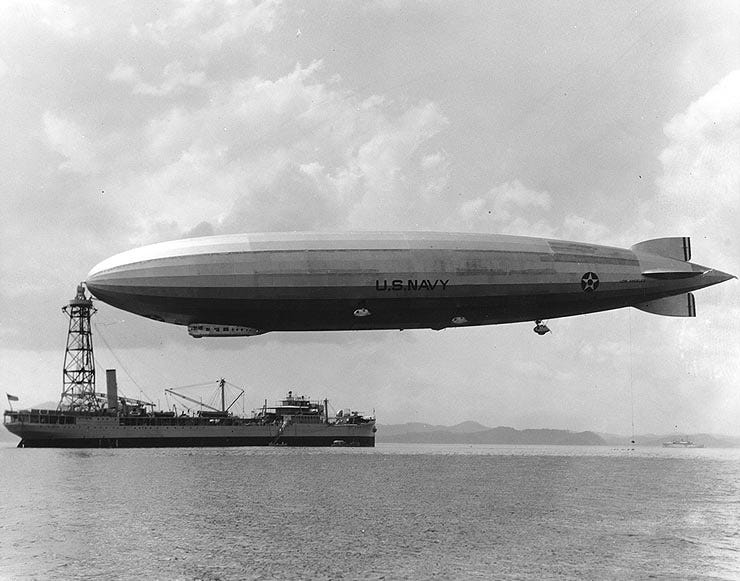

If you enjoy alternate history, you might enjoy Hite’s Law:

“All Change Points, from Xerxes to the last presidential election, create worlds with clean, efficient Zeppelin traffic. Changing history may produce Zeppelins as an inevitable by-product, much as bombarding uranium produces gamma rays. Often, the quickest way to tell if you are in an Alternate History is to look up, rather than at a newspaper or encyclopedia. From this premise, it is not outside the realm of Plausibility that our history between 1900 and 1936 was, in fact, an Alternate History. It would, at least, explain a lot.”

I recently appeared on the Sane New World podcast; go give it a listen!

The Enchanted Systems Roundup

Here are some links worth checking out that touch on the complex systems of our world (both built and natural):

🜸 Hey, Computer, Make Me a Font: “This is a story of my journey learning to build generative ML models from scratch and teaching a computer to create fonts in the process.”

🝳 Flow Lenia: Cellular automata and artificial life.

🝖 How ChatGPT and other AI tools could disrupt scientific publishing: “A world of AI-assisted writing and reviewing might transform the nature of the scientific paper.”

🜹 Whole Earth Index: Whole Earth Catalog (and associated magazines) have now been digitized and are available online.

🝊 How Will A.I. Learn Next? “As chatbots threaten their own best sources of data, they will have to find new kinds of knowledge.”

🜸 A single-serving waterhole in the Namib Desert using Remix

🜚 Lightning: a new organization that pairs nicely with the Modern Wisdom Literature Canon

🝳 Brains Accelerator: “a training program to provide the skills and opportunities to translate ambitious research visions that aren’t a good fit for a company but are too big for a single academic lab into impact. These visions could be anything from upending the way we make carbon-based products to how we understand the brain or build air-breathing fusion engines.”

🝤 How would we know whether there is life on Earth? This bold experiment found out: “Thirty years ago, astronomer Carl Sagan convinced NASA to turn a passing space probe’s instruments on Earth to look for life — with results that still reverberate today.”

🝖 Version Museum: “Version Museum showcases the visual history of popular websites, operating systems, applications, and games that have shaped our lives.”

🝳 AI as Sparkly Magic: “Given this widespread feeling, it’s perhaps not surprising that ✨sparkly✨ iconography has suddenly become much more common, especially as tech companies rush to find ways to integrate AI into their products.”

🜚 Humans Are Ready to Find Alien Life: “We finally have the tools we need. Now scientists just need to watch and wait.”

Until next time.

¹ And after our current one was created, God discovered some further issues and a few software patches were issued: wiping out most of humanity and starting over with Noah’s family, as well as allowing the eating of meat.

² Of course, even bad models can have their uses: the title of another paper from RAND is “Six (or So) Things You Can Do with a Bad Model.” As per this paper: “if the use of a bad model provides insight, it does so not by revealing truth about the world but by revealing its own assumptions and thereby causing its user to go learn something about the world.”

That was delightful, thank you.

Given that I am writing a book about our universe as a product of evolution (descended, through a Darwinian evolutionary process, from many earlier and more primitive universes), I am intrigued by this, in particular!

"One Jewish tradition holds that there were multiple worlds created and destroyed before our own. They didn’t quite work out—beta versions perhaps?—and so the universe was restarted again and again, until we got to the current version.¹"

It's interesting how many traditions intuited an evolved universe, with a long evolutionary history of earlier and more primitive ancestor-universes. For instance, the great British philosopher David Hume wrote, in 1779:

"Many worlds might have been botched and bungled, throughout an eternity, ere this system was struck out. Much labour lost: Many fruitless trials made: And a slow, but continued improvement carried on during infinite ages in the art of world-making."

Somewhat similar sentiment... Of course, pre-Darwin, and thus pre-evolutionary theory, they couldn't come up with a mechanism to back up this intuition. After Darwin, philosophers like Charles Sanders Peirce could write:

"To suppose universal laws of nature capable of being apprehended by the mind and yet having no reason for their special forms, but standing inexplicable and irrational, is hardly a justifiable position. Uniformities are precisely the sort of facts that need to be accounted for. Law is par excellence the thing that wants a reason. Now the only possible way of accounting for the laws of nature, and for uniformity in general, is to suppose them results of evolution."

But of course both Darwin and Peirce still thought we lived in an infinite and eternal universe. All evolution had to take place inside that one infinite, eternal thing. Only since the discovery of the expansion of the universe by Hubble, and of the Big Bang, and thus the fact that our universe came into being abruptly 13.8 billion years ago, could anyone conceive of the universe itself being the product of an evolutionary process...

Anyway, I'm rambling. But thanks again for that fascinating quote! It pushes even further back into the historical past the intuition that our universe evolved; I'll borrow it for my book, if I may.

And best of luck with your forthcoming book on the magic of code, which looks like being a gem.

That curve is exactly the same as the one in queuing theory comparing the cost of idle capacity with the delay cost of doing new, unplanned things.

Still, I like to go back to the statement that "there are no laws of physics" (https://www.ias.edu/news/in-the-media/2018/dijkgraaf-laws-of-physics).